Robots are claiming more and more newspaper and magazine headlines as we continue our transition to a new society. The articles span a continuum from utopian to dystopian scenarios, and I tend to be drawn to the dystopian ones. Why? Because it's easy to be optimistic about something new. It's easy to accept technological changes as better, more advanced, and more state-of-the-art simply by virtue of their being technological; but that is just wearing rose-coloured glasses while joining the march of folly.

For decades, robots have mostly been presented as endearing tin cans bursting with personality, and in a sense that remains the case. According to a recent article in Tech Review, MIT's magazine for a lay audience, an algorithm out of Stanford gives robots the ability to learn from their interactions with humans and adjust their behaviour.

[Stanford researcher Silvio] Savarese and colleagues developed a computer-vision algorithm that predicts the movement of people in a busy space. They trained a deep-learning neural network using several publicly available data sets containing video of people moving around crowded areas. And they found their software to be better at predicting people's movements than existing approaches for several of those data sets.

The most prominent precedent for predicting such movements is Google's self-driving car, and its share of accidents shows how difficult it is to teach robots to maneuver around the caprice of human freedom. Questions such as how to share a road or sidewalk, or when to take your turn and when to wait, remain open problems for robots as they learn to interact with humans.

One company, Starship Technologies, makes robots for delivery services. It has been testing its robots in the UK and the U.S., and it says that interacting with human beings remains the biggest challenge. Starship Technologies' robots have come into contact with 230,000 people, and their mock deliveries are closely monitored by engineers.

But the real leap in technology will be learning algorithms that give robots the intelligence to blend in and adapt to human behaviour, thus replicating its norms. What stands out for me in this scenario is that the distinction between human and robot or computer behaviour may be growing slimmer, not because robots are adapting to us, but because we are adapting to our devices. In many ways, we are becoming different kinds of human beings with different kinds of behaviours. Think of the child who tries to swipe at a magazine believing it's an iPad: that is a small model of how human behaviour is shifting. And this is why I tend to side with the dystopian scenarios when I think about the future of artificial intelligence.

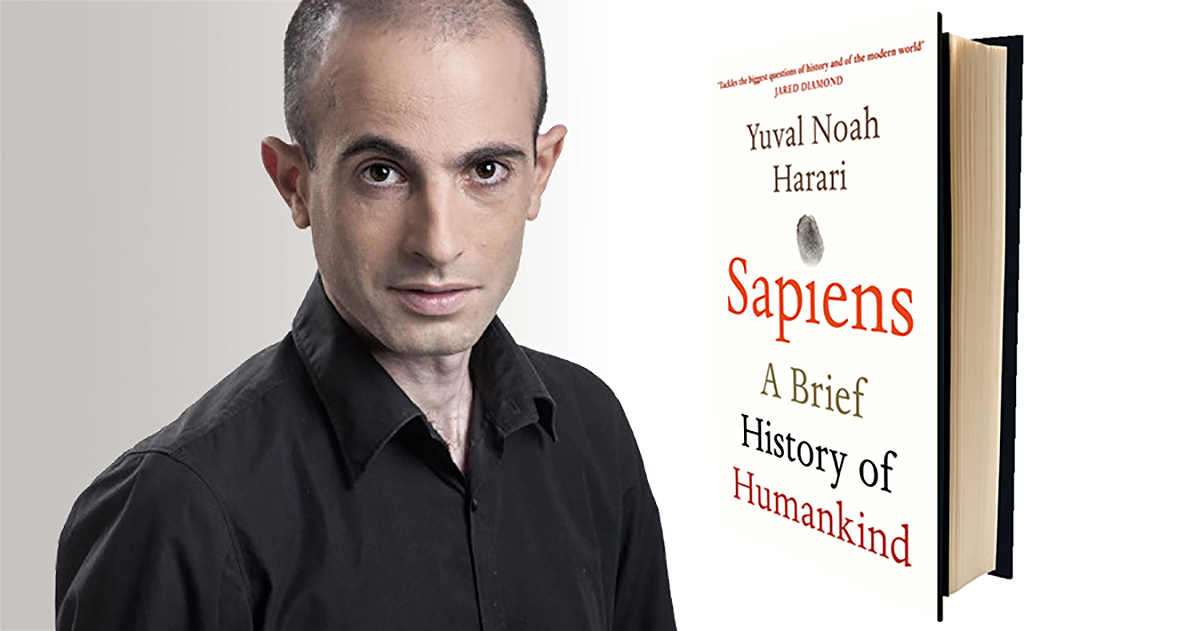

I read and research a lot of books, yet some books touted as monumental still slip past my awareness. One that had flown under my radar until recently is Yuval Noah Harari's work on the history of the human race and the emergence of AI technology. His first book, Sapiens, was lauded by Bill Gates and put on Mark Zuckerberg's personal book list for 26 million people to read. His forthcoming book, Homo Deus: A Brief History of Tomorrow, offers a glimpse into the future of humanity, and it doesn't look good. A fundamental question he asks in the book is this: how do we protect our world from destruction by our own hands?

In a recent article in the Guardian, Harari claimed that the human species hasn't really changed much in 10,000 years, but that the rise of AI and cyborgs (the merging of humans and machines, a prospect often associated with the so-called Singularity) is about to change all that. The problem is that only a handful of people have any vision of these technologies and of how they will benefit or hinder humankind.

Harari's argument rests on the unintended-consequences scenario: we have no idea what these technologies will do, and so we may very well be engineering our own demise. He also claims that the rise of AI and cyborgs will push Homo sapiens into extinction and replace it with a bio-engineered post-human capable of living forever. And I agree with him. Another recent article reports scientists predicting that robots will put lawyers, accountants, and doctors out of business; if Harari and others are right about the emergence of artificial intelligence, this is highly plausible.

I've written about these issues before. It's important that we understand where the world is going and how our technologies, the engines of our creation, are changing us. Education offers no reassuring answer to any of this, because we can't possibly know what jobs will exist 20 years from now, so much of what we teach children today may already be obsolete. How do we prepare for this future?

When dealing with an addiction or an emotional problem, it's important to name it: once it's named, you can start to deal with it. What do we name this period of human history, when robots remain endearing engines of creation and we are just at the cusp of the explosion of AI and the great disruptive shift to a new economy? How do we name our issue? Hubris? The White Swan? What matters is admitting we have an issue and then finding ways to correct it. But for Harari, and for others like Elon Musk, it may already be too late. Then what?
